We’re living in the era of generative AI, and generative adversarial networks (GANs) are among the key forces driving this technology. Read on to learn how GANs are unlocking new frontiers in AI creativity, producing outputs that can be almost indistinguishable from human-made work.

What Are Generative Adversarial Networks?

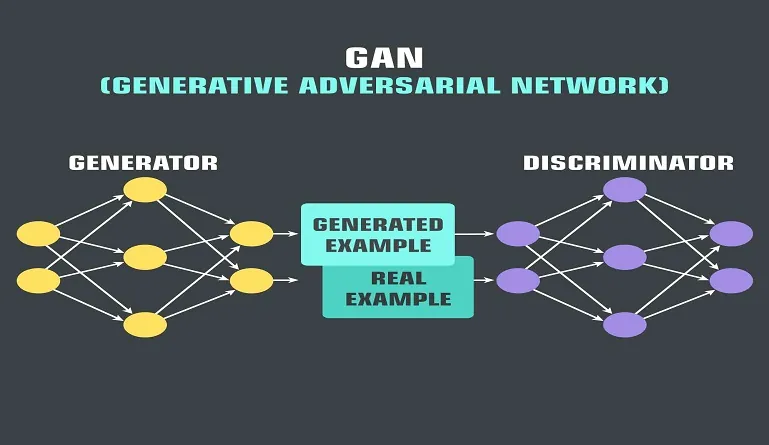

Generative adversarial networks, or GANs, are a class of artificial intelligence algorithms in which two neural networks, the generator and the discriminator, compete against each other. The generator crafts synthetic data samples, while the discriminator tries to distinguish genuine data from generated data.

Through this adversarial process, GANs achieve remarkable results in generating highly realistic and diverse data, making them a promising avenue for AI creativity and innovation.

Generative Adversarial Network Architecture: 6 GAN Components

The architecture of a generative adversarial network comprises:

1. Generator

The generator is the architect of synthetic data. It takes random noise as input and transforms it into data samples that ideally resemble real data from the training set. The generator consists of neural network layers that learn to map the input noise to the desired output data distribution.
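To make the idea concrete, here is a minimal plain-Python sketch of a generator that maps one-dimensional noise to a one-dimensional sample through a single hidden ReLU layer. The class name `TinyGenerator`, the layer size, and the random initialization are illustrative assumptions; real generators are deep networks built with a machine learning framework and produce images, audio, or text rather than single numbers.

```python
import random

def relu(v):
    return max(0.0, v)

class TinyGenerator:
    """Hypothetical two-layer generator: 1-D noise -> hidden ReLU layer -> 1-D sample."""

    def __init__(self, hidden=8, seed=0):
        rng = random.Random(seed)
        # Layer 1: noise value -> hidden features
        self.w1 = [rng.gauss(0.0, 1.0) for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        # Layer 2: hidden features -> output sample
        self.w2 = [rng.gauss(0.0, 1.0) for _ in range(hidden)]
        self.b2 = 0.0

    def __call__(self, z):
        h = [relu(w * z + b) for w, b in zip(self.w1, self.b1)]
        return sum(w * hv for w, hv in zip(self.w2, h)) + self.b2

def sample_noise(n, seed=1):
    # Random noise is the generator's "canvas"
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n)]

gen = TinyGenerator()
fake_batch = [gen(z) for z in sample_noise(4)]
print(fake_batch)
```

Training (not shown here) would adjust `w1`, `b1`, `w2`, and `b2` so that the outputs follow the real data distribution.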

2. Discriminator

The discriminator serves as the critic in the GAN framework. It examines data samples and determines whether they are real (from the training set) or fake (produced by the generator). Like the generator, the discriminator is built from neural network layers, which learn to classify input data as real or fake.
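A discriminator can be sketched the same way. In this hypothetical example, `LogisticDiscriminator` is a single logistic unit that returns the probability that its input is real. Its weights are hand-picked rather than learned, so that values near 3 look real and values near 0 look fake.

```python
import math

def sigmoid(t):
    # Numerically stable logistic function (avoids overflow for large |t|)
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

class LogisticDiscriminator:
    """Hypothetical one-unit discriminator: D(x) = sigmoid(w * x + c)."""

    def __init__(self, w=1.0, c=0.0):
        self.w = w
        self.c = c

    def __call__(self, x):
        # Estimated probability that x came from the real training data
        return sigmoid(self.w * x + self.c)

disc = LogisticDiscriminator(w=2.0, c=-4.0)
print(disc(3.0))  # high probability: looks "real"
print(disc(0.0))  # low probability: looks "fake"
```

An output of exactly 0.5 (here at x = 2) means the discriminator cannot decide either way.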

3. Adversarial training loop

The heart of the GAN architecture is the adversarial training loop. During training, the generator and the discriminator play a competitive game: the generator tries to fool the discriminator by producing increasingly realistic data, while the discriminator tries to tell real data from fake. The two networks are typically updated in alternation, and this adversarial dynamic drives both to improve iteratively.
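The loop below is a toy, self-contained sketch of these alternating updates. It assumes one-dimensional "real" data drawn from a normal distribution with mean 4, an affine generator G(z) = a*z + b, and a logistic discriminator D(x) = sigmoid(w*x + c). These choices and the function name `train_toy_gan` are illustrative, and the gradients are written out by hand so the example needs only the standard library.

```python
import math
import random

def sigmoid(t):
    # Numerically stable logistic function
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def train_toy_gan(steps=6000, batch=32, lr=0.05, seed=0):
    """Alternating GAN updates on 1-D data with hand-derived gradients."""
    rng = random.Random(seed)
    real_mean, real_std = 4.0, 1.0  # hypothetical "real" data: N(4, 1)
    a, b = 1.0, 0.0                 # generator: G(z) = a*z + b
    w, c = 0.0, 0.0                 # discriminator logit: w*x + c

    for _ in range(steps):
        # Discriminator step: minimize -log D(real) - log(1 - D(fake))
        real = [rng.gauss(real_mean, real_std) for _ in range(batch)]
        fake = [a * rng.gauss(0.0, 1.0) + b for _ in range(batch)]
        gw = gc = 0.0
        for x in real:
            g = -(1.0 - sigmoid(w * x + c))  # d(-log D)/d(logit)
            gw += g * x
            gc += g
        for x in fake:
            g = sigmoid(w * x + c)           # d(-log(1 - D))/d(logit)
            gw += g * x
            gc += g
        w -= lr * gw / batch
        c -= lr * gc / batch

        # Generator step: minimize the non-saturating loss -log D(G(z))
        ga = gb = 0.0
        for _ in range(batch):
            z = rng.gauss(0.0, 1.0)
            x = a * z + b
            dx = -(1.0 - sigmoid(w * x + c)) * w  # d(-log D(x))/dx
            ga += dx * z
            gb += dx
        a -= lr * ga / batch
        b -= lr * gb / batch

    return a, b, w, c

a, b, w, c = train_toy_gan()
print(f"learned offset b = {b:.2f} (real mean is 4.0)")
```

Because this discriminator is linear, it can only detect a difference in the location of the two distributions. The generator therefore reliably learns the offset `b`, while the spread `a` is not pinned down and may drift. Practical GANs use deep networks for both players so that the discriminator can detect much richer differences.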

4. Loss functions

GANs rely on specific loss functions to guide training. The generator seeks to minimize its loss by producing data that the discriminator classifies as real. Conversely, the discriminator aims to reduce its loss by correctly classifying real and fake samples. The standard choice is binary cross-entropy, since the discriminator’s task is a binary (real vs. fake) classification problem.
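Both losses can be written in terms of the discriminator’s output probabilities. This sketch follows the formulation from the original GAN paper (Goodfellow et al., 2014). The discriminator is scored with binary cross-entropy using label 1 for real samples and 0 for generated ones. The generator uses the common "non-saturating" variant: it minimizes -log D(G(z)) rather than log(1 - D(G(z))), so it still receives useful gradients early in training. The function names are illustrative.

```python
import math

def bce(p, target, eps=1e-12):
    """Binary cross-entropy for one predicted probability p and a 0/1 target."""
    p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
    return -(target * math.log(p) + (1 - target) * math.log(1.0 - p))

def discriminator_loss(d_real, d_fake):
    # Real samples are labeled 1, generated samples are labeled 0
    real_term = sum(bce(p, 1) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    # Non-saturating form: the generator wants D to label its samples as real (1)
    return sum(bce(p, 1) for p in d_fake) / len(d_fake)

print(discriminator_loss([0.9, 0.8], [0.1, 0.2]))  # small: D is doing well
print(generator_loss([0.1, 0.2]))                  # large: G is not fooling D
```

When the discriminator outputs 0.5 for everything, its loss is 2 log 2 and the generator’s loss is log 2, the values at the theoretical equilibrium.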

5. Training data

GANs require a dataset of real data samples to learn from during training. This dataset is the benchmark against which the generator’s output is evaluated, and its quality and diversity play a crucial role in determining how well the GAN performs.
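A common preparation step, used for example in the DCGAN paper (Radford et al., 2015), is to scale training images to the range [-1, 1]. This matches the output range of a generator whose final layer uses tanh. The sketch below assumes 8-bit pixel values (0 to 255) stored in a flat Python list, and the function names are hypothetical.

```python
def scale_to_tanh_range(pixels, max_value=255.0):
    """Map raw pixel intensities in [0, max_value] to [-1, 1]."""
    return [2.0 * (p / max_value) - 1.0 for p in pixels]

def unscale_from_tanh_range(values, max_value=255.0):
    """Invert the mapping so generated outputs can be viewed as ordinary pixels."""
    return [(v + 1.0) / 2.0 * max_value for v in values]

print(scale_to_tanh_range([0, 127.5, 255]))  # [-1.0, 0.0, 1.0]
```

Real and generated samples then live on the same scale, which keeps the discriminator from telling them apart by range alone.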

6. Optimization algorithm

GANs employ optimization algorithms, such as stochastic gradient descent (SGD) or its variants like Adam, to update the parameters of the generator and discriminator networks during training. These algorithms adjust the network weights to minimize the respective loss functions and improve the overall performance of the GAN.
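As an illustration of what such an optimizer does, here is a plain-Python sketch of a single Adam update. The defaults `lr=2e-4` and `beta1=0.5` follow the settings the DCGAN paper (Radford et al., 2015) reported as stabilizing GAN training. The helper names and the toy quadratic objective are assumptions for demonstration; in practice you would use a framework’s built-in optimizer, with one optimizer instance per network.

```python
import math

def init_adam_state(n):
    return {"t": 0, "m": [0.0] * n, "v": [0.0] * n}

def adam_step(params, grads, state, lr=2e-4, beta1=0.5, beta2=0.999, eps=1e-8):
    """Apply one Adam update in place to a list of float parameters."""
    state["t"] += 1
    t = state["t"]
    for i, g in enumerate(grads):
        # Exponential moving averages of the gradient and the squared gradient
        state["m"][i] = beta1 * state["m"][i] + (1 - beta1) * g
        state["v"][i] = beta2 * state["v"][i] + (1 - beta2) * g * g
        # Bias correction for the zero-initialized averages
        m_hat = state["m"][i] / (1 - beta1 ** t)
        v_hat = state["v"][i] / (1 - beta2 ** t)
        params[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)

# Minimize f(x) = (x - 3)^2 as a stand-in for a network's loss
params = [0.0]
state = init_adam_state(1)
for _ in range(5000):
    grad = [2 * (params[0] - 3)]
    adam_step(params, grad, state, lr=0.01)
print(params[0])
```

Dividing by the running root-mean-square gradient gives each parameter its own effective step size, which helps when the generator and discriminator gradients differ widely in scale.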

How Do GANs Work? Generative Adversarial Networks Explained

The working principle of a generative adversarial network (GAN) can be compared to a sort of “creative duel” between two opponents, the generator and the discriminator.

On one side, the generator kicks off with random noise as its canvas and then uses a series of intricate neural network layers to transform that noise into something resembling authentic data—whether it’s images, text, or even sound waves.

The discriminator, meanwhile, scrutinizes data samples for any sign that they are fake. Its mission is to tell genuine data apart from the artificial samples churned out by the generator. Over the course of training, it learns the subtle differences that separate real data from the generator’s imitations.

Through this adversarial interplay, the generator and the discriminator gradually refine their abilities until, ideally, they reach a delicate equilibrium. At that point, the generator has mastered the art of deception: it produces data so authentic that the discriminator can no longer reliably tell it apart from reality. This is the GAN’s ultimate objective, and it is what allows GANs to produce creative output that approaches human-made work.
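For the original GAN objective (Goodfellow et al., 2014), this equilibrium can be stated precisely. The generator G and discriminator D play the minimax game below, where p_data is the real data distribution, p_z the noise distribution, and p_g the distribution of generated samples. For a fixed generator, the best possible discriminator is D*_G. When the generator matches the data exactly (p_g = p_data), D*_G outputs 1/2 everywhere, which is the formal version of the discriminator "struggling to tell them apart."

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

$$
D^*_G(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)}, \qquad p_g = p_{\text{data}} \;\Longrightarrow\; D^*_G(x) = \tfrac{1}{2}, \quad V(D^*_G, G) = -\log 4
$$

In practice, training rarely reaches this ideal point exactly, which is one source of the difficulties described under Challenges and Limitations.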

The Significance of GANs in AI

Unlike traditional generative models, which often struggle to capture the intricacies of high-dimensional data distributions, GANs excel at generating data with remarkable fidelity and diversity, making them a cornerstone of AI creativity and innovation.

Their uniqueness lies in their ability to harness adversarial learning to push the boundaries of artificial intelligence. Traditional generative models typically rely on predefined objective functions and heuristics, such as an explicit likelihood or a pixel-by-pixel reconstruction error, which limits their flexibility in capturing the underlying structure of complex data distributions.

In contrast, a GAN’s adversarial architecture fosters a dynamic learning process in which the generator and discriminator continuously adapt to each other’s feedback; in effect, the discriminator acts as a learned loss function for the generator. This interplay enables GANs to generate highly realistic data, and the same adversarial idea has also been explored as a way to improve resilience to adversarial attacks and data perturbations.

5 Surprising Applications of GANs

Generative AI and GANs are instrumental in AI-driven content creation, but the technology also has a variety of other applications. These include:

1. Data augmentation

GANs offer a powerful tool for augmenting training datasets by generating synthetic samples. This augmentation strategy enhances the diversity and size of datasets – thereby improving the generalization and robustness of machine learning models trained on limited data.
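In code, augmentation amounts to drawing extra samples from a trained generator and mixing them into the training set. In this sketch, `toy_generator` is a hypothetical stand-in for a trained GAN. Tagging each sample with its origin is a design choice, not a requirement, but it makes synthetic data easy to track or down-weight later.

```python
import random

def augment_with_synthetic(real, generator, n_synthetic, seed=0):
    """Return the real samples plus n_synthetic generated ones, shuffled together.

    `generator` is any callable mapping a noise value to a sample; here it
    stands in for a trained GAN generator (hypothetical).
    """
    rng = random.Random(seed)
    synthetic = [generator(rng.gauss(0.0, 1.0)) for _ in range(n_synthetic)]
    # Tag each sample with its origin so synthetic data can be tracked later
    combined = [(x, "real") for x in real] + [(x, "synthetic") for x in synthetic]
    rng.shuffle(combined)
    return combined

# Hypothetical "trained" generator for a 1-D feature centered at 4
def toy_generator(z):
    return 4.0 + 1.0 * z

real_data = [3.8, 4.1, 4.4, 3.9]
dataset = augment_with_synthetic(real_data, toy_generator, n_synthetic=6)
print(len(dataset))  # 10
```

For labeled datasets, a class-conditional GAN would typically be used so that each synthetic sample comes with a label.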

2. Super-resolution imaging

GANs are employed in super-resolution imaging tasks to enhance the resolution and quality of low-resolution images. Through adversarial training, GANs learn to generate high-resolution images from low-resolution inputs, with applications in medical imaging, satellite imagery, digital photography, and law enforcement.
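As one concrete example, SRGAN (Ledig et al., 2017) trains its super-resolution generator with a "perceptual" loss that adds a small adversarial term to a content loss. Here the content loss compares the generated and true high-resolution images (either pixel-wise mean squared error or a distance between VGG feature maps). The second term rewards outputs that the discriminator D judges to be real, summed over the N low-resolution training images:

$$
\ell^{SR} = \underbrace{\ell^{SR}_{X}}_{\text{content loss}} + 10^{-3} \underbrace{\sum_{n=1}^{N} -\log D\big(G(I^{LR}_n)\big)}_{\text{adversarial loss}}
$$

The small weight keeps the output faithful to the input image, while the adversarial term pushes it toward the sharp textures found in real high-resolution photos.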

3. Anomaly detection

GANs can be used for anomaly detection by learning the underlying distribution of normal (non-anomalous) samples. During training, the generator learns to produce samples that resemble normal data, and the discriminator learns what normal data looks like. At test time, inputs that deviate from this learned distribution are flagged as anomalies, for example because the generator cannot reproduce them closely or the discriminator scores them as unrealistic. This approach is used in domains such as cybersecurity, fraud detection, and fault diagnosis.
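The sketch below shows the core idea in a simplified form, in the spirit of AnoGAN (Schlegl et al., 2017). It searches the generator’s latent space for the sample closest to a test input and uses the remaining residual as the anomaly score. AnoGAN itself optimizes the latent vector by gradient descent and adds a discriminator-based term. This version uses a grid search and a residual-only score for simplicity, and `toy_generator`, the grid, and the threshold are all hypothetical.

```python
def anomaly_score(x, generator, z_grid):
    """Reconstruction-based score: how closely can the generator reproduce x?

    Searches a grid of latent values for the z whose output G(z) is closest
    to x and returns that smallest residual |x - G(z)|.
    """
    return min(abs(x - generator(z)) for z in z_grid)

# Hypothetical generator trained on normal sensor readings in roughly [8, 12]
def toy_generator(z):
    # Clipped affine map: any latent value lands in the "normal" range
    return 10.0 + 2.0 * max(-1.0, min(1.0, z))

z_grid = [i / 100.0 for i in range(-300, 301)]  # latent search grid from -3 to 3
threshold = 0.5                                 # hypothetical decision threshold

for reading in [9.7, 11.9, 15.0, 3.0]:
    score = anomaly_score(reading, toy_generator, z_grid)
    label = "anomaly" if score > threshold else "normal"
    print(reading, round(score, 2), label)
```

Readings inside the range the generator learned get a near-zero score, while 15.0 and 3.0 cannot be reproduced and are flagged.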

4. Domain adaptation

GANs facilitate domain adaptation by learning to translate data distributions from a source domain to a target domain. Through adversarial training, GANs can map samples from one domain to another while preserving their semantic content. This application is beneficial in tasks such as image-to-image translation, where images captured in one domain (e.g., daytime) are transformed into another domain (e.g., nighttime).
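Unpaired translation models such as CycleGAN (Zhu et al., 2017) preserve content with a cycle-consistency loss: translating an image to the other domain and back should recover the original. The sketch below computes that L1 loss for two hypothetical mapping functions on RGB pixel triples. In CycleGAN, the mappings are learned networks, and this term is added to the usual adversarial losses (with a weight of 10 in the paper).

```python
def l1(u, v):
    # Mean absolute difference between two equal-length vectors
    return sum(abs(a - b) for a, b in zip(u, v)) / len(u)

def cycle_consistency_loss(xs, ys, G, F):
    """Cycle loss: X -> Y -> X and Y -> X -> Y should both return to the start."""
    forward = sum(l1(F(G(x)), x) for x in xs) / len(xs)
    backward = sum(l1(G(F(y)), y) for y in ys) / len(ys)
    return forward + backward

# Hypothetical "day -> night" and "night -> day" mappings on RGB pixels
def to_night(pixel):
    return [0.4 * c for c in pixel]  # darken

def to_day(pixel):
    return [c / 0.4 for c in pixel]  # exact inverse of to_night

def to_day_lossy(pixel):
    return [min(1.0, c / 0.3) for c in pixel]  # mismatched, clipped inverse

day = [[0.8, 0.7, 0.6], [0.5, 0.5, 0.9]]
night = [[0.2, 0.2, 0.3]]
print(cycle_consistency_loss(day, night, to_night, to_day))        # ~0: cycle closes
print(cycle_consistency_loss(day, night, to_night, to_day_lossy))  # > 0: content lost
```

A low cycle loss does not prove the translation looks realistic; that is the job of the adversarial terms. It only discourages the mappings from discarding the image’s content.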

5. Data privacy and synthetic data generation

GANs support data privacy by learning generative models from sensitive or limited data sources. Instead of sharing sensitive data directly, organizations can share GAN-generated synthetic samples that preserve the statistical properties of the original data. A standard GAN does not by itself guarantee privacy or anonymity, since it can memorize training records; formal guarantees require additional techniques such as differential privacy. This approach finds applications in healthcare, finance, and other sectors where data privacy is paramount.
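A basic sanity check on synthetic data is to compare its summary statistics with those of the original. The sketch below uses Python’s `statistics` module on a hypothetical sensitive column and a hypothetical GAN-generated counterpart. Matching statistics speaks only to fidelity; it says nothing about how much private information the synthetic data might leak.

```python
import statistics

def summary(xs):
    return statistics.mean(xs), statistics.stdev(xs)

def fidelity_gap(real, synthetic):
    """Absolute differences in mean and standard deviation between two samples."""
    (m_r, s_r), (m_s, s_s) = summary(real), summary(synthetic)
    return abs(m_r - m_s), abs(s_r - s_s)

real_ages = [34, 45, 29, 61, 50, 38, 42]       # hypothetical sensitive column
synthetic_ages = [36, 44, 31, 58, 49, 40, 41]  # hypothetical GAN output
mean_gap, std_gap = fidelity_gap(real_ages, synthetic_ages)
print(mean_gap, std_gap)
```

Here the means match exactly while the standard deviations differ by roughly 1.8, suggesting the synthetic ages are somewhat less spread out than the real ones.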

Challenges and Limitations

While GANs are central to creative AI, it pays to understand their challenges as you delve deeper into their applications.

One significant challenge you might encounter is training instability. Because each network’s update changes the problem the other network is trying to solve, the losses can oscillate instead of settling, and training may fail to converge at all. This instability can make training GANs feel like navigating choppy waters.
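A classic toy model of this instability is the two-player game min over x, max over y of f(x, y) = x·y, whose only equilibrium is (0, 0). It is not a GAN, but it has the same adversarial structure. The sketch below applies simultaneous gradient steps (x descends, y ascends) and records the distance from the equilibrium. Each step multiplies that distance by sqrt(1 + lr²), so the players spiral away instead of converging. The function name and step size are illustrative.

```python
import math

def simultaneous_gd(x, y, lr=0.1, steps=100):
    """Simultaneous gradient play on min_x max_y x*y; returns distance to (0, 0) per step."""
    radii = []
    for _ in range(steps):
        grad_x = y  # d(xy)/dx: x moves against it
        grad_y = x  # d(xy)/dy: y moves along it
        x, y = x - lr * grad_x, y + lr * grad_y
        radii.append(math.hypot(x, y))
    return radii

radii = simultaneous_gd(1.0, 1.0)
print(radii[0], radii[-1])  # the distance grows every step
```

Alternating the two updates instead keeps the iterates bounded, but they still circle the equilibrium rather than settling on it. This mirrors the oscillating losses often seen when training GANs.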

You might also encounter mode dropping, a problem closely related to mode collapse. It occurs when the generator fails to cover specific modes, or variations, in the data distribution, resulting in a lack of diversity in the generated samples. It’s akin to painting a picture with a limited palette: no matter how skilled you are, some nuances will be missed.
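When the true modes are known, as in synthetic benchmarks built from a mixture of well-separated clusters, mode dropping can be measured directly by counting how many modes the generated samples reach. In this sketch, the cluster centers, the radius, and both sample sets are hypothetical. The `collapsed` set imitates a generator that has dropped two of the three modes.

```python
import random

def modes_covered(samples, mode_centers, radius=0.5):
    """Count how many known modes have at least one sample within `radius`."""
    covered = set()
    for s in samples:
        for i, center in enumerate(mode_centers):
            if abs(s - center) <= radius:
                covered.add(i)
    return len(covered)

rng = random.Random(0)
modes = [-4.0, 0.0, 4.0]  # hypothetical real data: three clusters

healthy = [rng.gauss(rng.choice(modes), 0.1) for _ in range(300)]
collapsed = [rng.gauss(4.0, 0.1) for _ in range(300)]  # only one cluster survives

print(modes_covered(healthy, modes))    # 3
print(modes_covered(collapsed, modes))  # 1
```

Both sample sets look plausible one sample at a time. The problem only shows up when you look at the whole batch, which is why diversity has to be measured explicitly.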

Additionally, GANs are sensitive to hyperparameters and architecture choices, requiring careful tuning and experimentation to achieve optimal performance.
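As a starting point for tuning, the DCGAN paper (Radford et al., 2015) reported the settings collected in `base` below. The small grid sweep around them is a hypothetical example of the experimentation this requires. The parameter names are illustrative and not tied to any particular library.

```python
import itertools

# Settings reported in the DCGAN paper (Radford et al., 2015)
base = {
    "learning_rate": 2e-4,  # Adam step size for both networks
    "adam_beta1": 0.5,      # lowered from the usual 0.9 to reduce oscillation
    "batch_size": 128,
    "init_std": 0.02,       # weights initialized from N(0, 0.02)
    "leaky_relu_slope": 0.2,
}

def sweep(base, grid):
    """Yield one config per combination of the values listed in `grid`."""
    keys = list(grid)
    for values in itertools.product(*(grid[k] for k in keys)):
        cfg = dict(base)
        cfg.update(zip(keys, values))
        yield cfg

# Hypothetical grid around the DCGAN defaults
grid = {"learning_rate": [1e-4, 2e-4, 4e-4], "adam_beta1": [0.0, 0.5]}
configs = list(sweep(base, grid))
print(len(configs))  # 6
```

Each config would then be trained and compared, ideally on a sample-quality metric as well as by inspecting generated outputs, since GAN losses alone say little about quality.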

Getting Started with Generative Adversarial Networks

GANs are increasingly becoming a common framework for building AI applications. Projects such as IllustrationGAN and models such as CycleGAN apply this technology to complex content creation and image manipulation tasks. GAN technology is still evolving, however, and tools such as IBM’s GAN Toolkit and the interactive GAN Lab visualization make it easier for developers and enterprises to experiment with GANs and weave them into their workflows.